Metaverse builders grapple with sex harassment conundrum

Nina Jane Patel felt confined and under threat as the male avatars closed in, intimidating her with verbal abuse, touching her avatar against her will and photographing the incident.

The abuse took place in a virtual world, but it felt real to her, and stories like hers are causing severe headaches for architects of the metaverse -- the 3D, immersive version of the internet being developed by the likes of Microsoft and Meta.

"I entered the shared space and almost immediately three or four male avatars came very close to me, so there was a sense of entrapment," Patel told AFP.

"Their voices started verbally and sexually harassing me, with sexual innuendos," said the London-based entrepreneur.

"They touched and they groped my avatar without my consent. And while they were doing that, another avatar was taking selfie photos."

Patel, whose company is developing child-friendly metaverse experiences, says it was "nothing short of sexual assault".

Her story and others like it have prompted soul-searching over the nature of harassment in virtual worlds, and raised a difficult question: can an avatar suffer sexual assault?

- Tricking the brain -

"VR (virtual reality) relies on, essentially, tricking your brain into perceiving the virtual world around it as real," says Katherine Cross, a PhD student at the University of Washington who has worked on online harassment.

"When it comes to harassment in virtual reality -- for instance, a sexual assault -- it can mean that in the first instant your body treats it as real before your conscious mind can catch up and affirm this is not physically occurring."

Her research suggests that such victimisation causes real-world harm, even though it takes place in a virtual space.

Underlining this point, Patel explained that her ordeal briefly continued beyond the virtual space.

She said she eventually took off her VR headset after failing to get her attackers to stop, but she could still hear them through the speakers in her living room.

The male avatars were taunting her, saying "don't pretend you didn't like it" and "that's why you came here".

The ordeal took place last November in the "Horizon Venues" virtual world being built by Meta, the parent company of Facebook.

The space hosts virtual events like concerts, conferences and basketball games.

The legal implications are still unclear, although Cross suggests that sexual harassment laws in some countries could be extended to cover this type of act.

- Protective bubbles -

Meta and Microsoft, two of the tech giants most heavily committed to the metaverse, have tried to quell the controversy by developing tools that keep unknown avatars at a distance.

Microsoft has also removed dating spaces from its AltspaceVR metaverse platform.

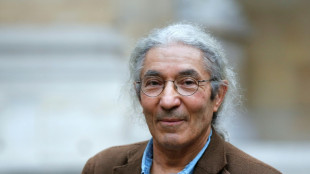

"I think the harassment issue is one that will actually get resolved because people will self-select which platform they use," says Louis Rosenberg, an engineer who developed the first augmented reality system in 1992 for the US Air Force research labs.

The entrepreneur, who has since founded a company specialising in artificial intelligence, told AFP he was more concerned about the way companies will monetise the virtual space.

He says a model based on advertising is likely to lead companies to capture all kinds of personal data, from users' eye movements and heart rates to their real-time interactions.

"We need to change the business model," he says, suggesting that safety would be better protected if funding came from subscriptions.

However, tech companies have made themselves fantastically wealthy through a business model based on targeted advertising refined by vast streams of data.

And the industry is already looking to get ahead of the curve by setting its own standards.

The Oasis Consortium, a think tank with ties to several tech companies and advertisers, has drawn up safety standards it believes are suited to the metaverse era.

"When platforms identify content that poses a real-world risk, it's essential to notify law enforcement," says one of its standards.

But that leaves the main question unresolved: how do platforms define "real-world risk"?

H.Klein--MP